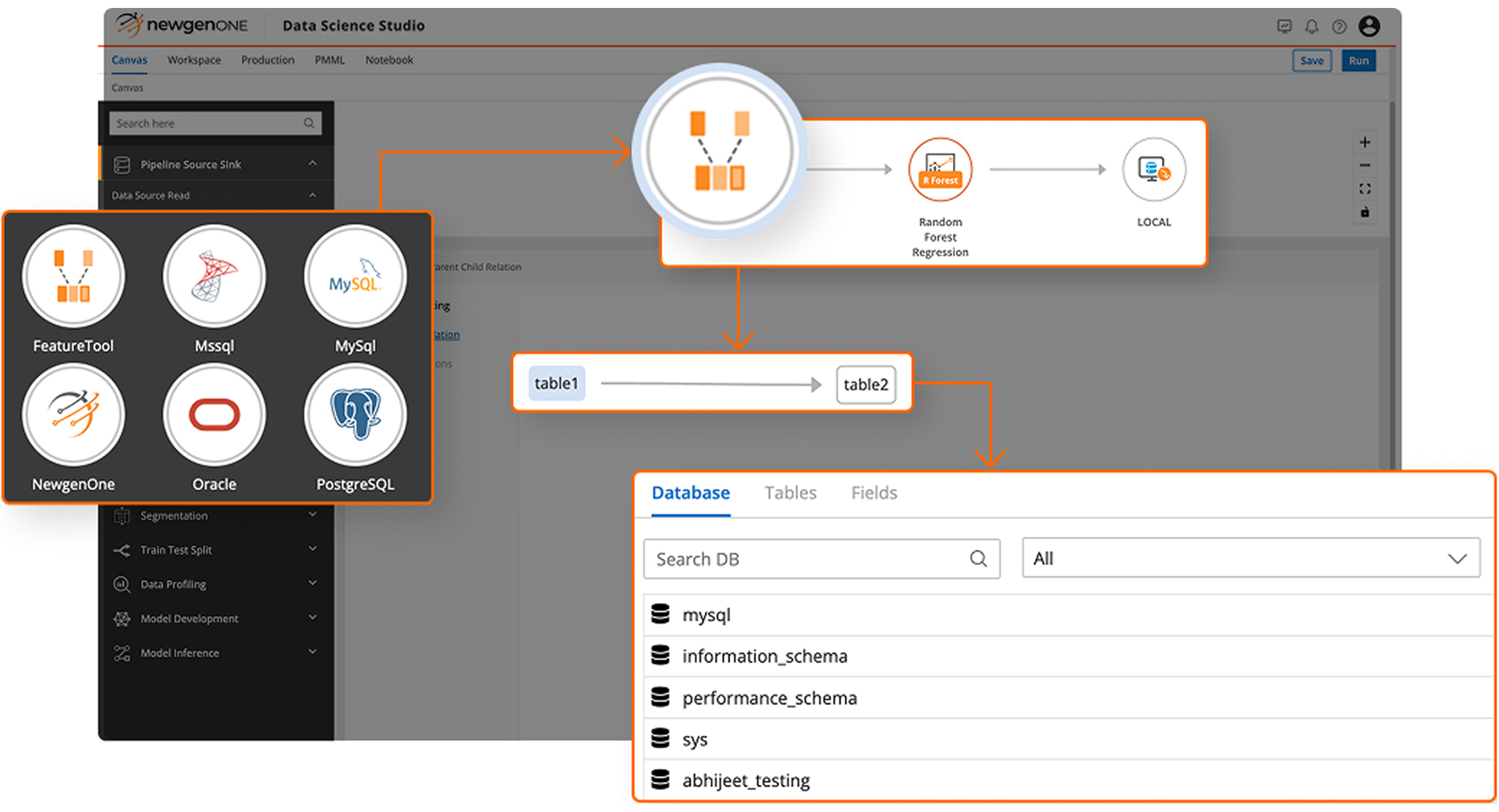

Leverage an intuitive visual interface to prepare data at enterprise scale for analytics and machine learning initiatives. Improve productivity and trust by validating data for accuracy and consistency, and perform core data operations such as blending, curating, and wrangling to securely build and scale reliable data pipelines using in-built connectors.

Enterprise-grade Capabilities of NewgenONE Data Fusion

Blend and Curate Enterprise Data with Ease

- Connect to diverse enterprise data sources, including NoSQL and relational databases, BLOB storage, and real-time queues, using in-built connectors.

- Prepare and curate data visually by applying joins across multiple datasets and data sources to create unified, analysis-ready datasets.

- Process massive-scale data efficiently using advanced, distributed extract, transform, and load (ETL) capabilities.

- Save the prepared data to multiple destinations, including Hadoop, BLOB storage, and Elasticsearch.
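Blending two sources with a join is the core idea behind creating a unified, analysis-ready dataset. The sketch below is a generic, hypothetical illustration in plain Python, not the NewgenONE Data Fusion API: it assumes two in-memory datasets that share a `customer_id` column and performs a hash-based inner join on it. The function name `inner_join` and the sample data are invented for illustration.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def inner_join(left: List[Record], right: List[Record], key: str) -> List[Record]:
    """Hash join: index the right dataset by key, then probe it with each left row."""
    index: Dict[Any, List[Record]] = {}
    for row in right:
        index.setdefault(row[key], []).append(row)

    joined: List[Record] = []
    for row in left:
        # Rows without a match on the other side are dropped (inner-join semantics).
        for match in index.get(row[key], []):
            # Merge both rows; right-hand values win if a column name clashes.
            joined.append({**row, **match})
    return joined


# Hypothetical sample data standing in for two separate enterprise sources.
customers = [
    {"customer_id": 1, "name": "Asha"},
    {"customer_id": 2, "name": "Ben"},
    {"customer_id": 3, "name": "Chen"},
]
orders = [
    {"customer_id": 1, "order_total": 120.0},
    {"customer_id": 1, "order_total": 80.0},
    {"customer_id": 3, "order_total": 45.5},
]

unified = inner_join(customers, orders, "customer_id")
for row in unified:
    print(row)
```

Customer 2 has no orders, so it does not appear in the joined output. A distributed engine applies the same idea by partitioning both sides on the join key so that matching rows meet on the same worker.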

Wrangle Data to Build and Schedule Intelligent Data Pipelines

- Perform operations on data intelligently, including extraction, filtering, transformation, and group-by aggregation.

- Derive new features from existing data using the built-in capabilities.

- Schedule and execute end-to-end data pipelines seamlessly on new datasets using scalable, distributed compute.
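The wrangling steps listed above (filter, transform, derive a feature, group by) can be chained into one repeatable pipeline. The following is a minimal, hypothetical sketch in plain Python, not the product's visual pipeline engine: it assumes a small sales dataset with `region`, `quantity`, `unit_price`, and `status` columns, and all function and column names are invented for illustration.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]


def filter_rows(rows: List[Record], predicate: Callable[[Record], bool]) -> List[Record]:
    """Keep only the rows that satisfy the predicate."""
    return [r for r in rows if predicate(r)]


def add_feature(rows: List[Record], name: str, fn: Callable[[Record], Any]) -> List[Record]:
    """Return new rows with an extra column computed from existing columns."""
    return [{**r, name: fn(r)} for r in rows]


def group_sum(rows: List[Record], key: str, value: str) -> Dict[Any, float]:
    """Group rows by `key` and sum the `value` column within each group."""
    totals: Dict[Any, float] = defaultdict(float)
    for r in rows:
        totals[r[key]] += r[value]
    return dict(totals)


def run_pipeline(rows: List[Record]) -> Dict[Any, float]:
    """Hypothetical end-to-end pipeline: filter -> derive feature -> group by."""
    completed = filter_rows(rows, lambda r: r["status"] == "completed")
    with_revenue = add_feature(completed, "revenue", lambda r: r["quantity"] * r["unit_price"])
    return group_sum(with_revenue, "region", "revenue")


# Hypothetical input batch.
sales = [
    {"region": "north", "quantity": 2, "unit_price": 50, "status": "completed"},
    {"region": "north", "quantity": 1, "unit_price": 30, "status": "cancelled"},
    {"region": "south", "quantity": 4, "unit_price": 25, "status": "completed"},
    {"region": "north", "quantity": 3, "unit_price": 10, "status": "completed"},
]

print(run_pipeline(sales))
```

Because the pipeline is a single function of its input, a scheduler can simply call `run_pipeline` again on each new batch of data; in a distributed setting, the filter and feature steps run independently per partition, and only the group-by step needs to combine partial results.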

Handle Enterprise-scale Data Efficiently

- Manage large-scale data with AI-driven, in-memory, distributed data virtualization instead of storing a local copy.

- Prepare and process large-scale data on a horizontally scalable platform.

Scale Data Pipelines with In-built Data Connectors

- Save the prepared data to multiple destinations, such as Hadoop, BLOB storage, and other file systems.

- Integrate data from multiple types of data stores, including relational, NewSQL, and NoSQL databases.

- Reuse visual data pipelines and share them with team members and other users.