Introduction

Just as we communicate with each other through words, letters, or gestures, other living creatures have their own forms of communication. But have you ever asked yourself how applications communicate?

Applications talk to each other through intermediary software known as an API, or Application Programming Interface.

For example, each time we listen to music via Spotify or binge-watch something on Netflix, we indirectly use an API.

API Overview

An Application Programming Interface (API) is a set of software-building tools, subroutine definitions, and communication protocols that facilitate interaction between systems. An API may be for a database system, an operating system, computer hardware, or a web-based system.

An API makes it simpler for programmers to use certain technologies to build applications. An API can include specifications for data structures, variables, routines, object classes, remote calls, and so on.

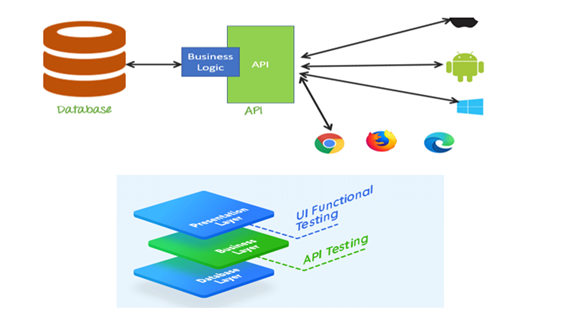

API Architecture

In software application (app) development, the API is the middle layer between the presentation (UI) layer and the database layer. APIs provide an abstraction over the internal business logic, which is not exposed to the outside world.

Are APIs affecting the business architecture and logic flow?

Nowadays, applications are getting more complex due to varying user needs, so APIs have become a key consideration in shaping the business logic flow and its architecture.

Hence, API testing is now essential to verify the core business logic and to reduce functional bugs and security loopholes in an application.

API Testing

What do we need to test in an API? It is a big question, because APIs work both standalone and together to make a system function. So what is the best approach to testing them?

The answer is both standalone and integrated. The tester needs to test each API on its own and also test the APIs together, i.e., use the output of one API as the input to another and check the results.
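
The difference between the two levels can be sketched in Python. This is only an illustration: `create_user` and `get_user` are hypothetical in-memory stand-ins for two APIs, not part of any real system, and a real suite would call actual endpoints instead.

```python
# Hypothetical in-memory stand-ins for two APIs (assumptions for illustration).
_USERS = {}


def create_user(name):
    """Pretend 'create user' API: stores the user and returns its new id."""
    user_id = len(_USERS) + 1
    _USERS[user_id] = {"id": user_id, "name": name}
    return {"status": 201, "body": {"id": user_id}}


def get_user(user_id):
    """Pretend 'get user' API: looks a user up by id."""
    user = _USERS.get(user_id)
    if user is None:
        return {"status": 404, "body": None}
    return {"status": 200, "body": user}


def test_create_user_standalone():
    # Standalone: only the first API is exercised.
    response = create_user("alice")
    assert response["status"] == 201
    assert isinstance(response["body"]["id"], int)


def test_get_user_standalone():
    # Standalone: only the second API is exercised, with an unknown id.
    assert get_user(9999)["status"] == 404


def test_create_then_get_integrated():
    # Integrated: the output of one API becomes the input of the other.
    created = create_user("bob")
    new_id = created["body"]["id"]
    fetched = get_user(new_id)
    assert fetched["status"] == 200
    assert fetched["body"]["name"] == "bob"


if __name__ == "__main__":
    test_create_user_standalone()
    test_get_user_standalone()
    test_create_then_get_integrated()
    print("all checks passed")
```

The integrated test catches problems that neither standalone test can see, such as the first API returning an id that the second API does not recognize.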

Can we do this manually? Yes, but what happens when the number of APIs grows and their functionality becomes complex? Is manual API testing still feasible?

This is where automated API testing comes in: it reduces the effort and the redundant cycles of testing APIs.

Automated API testing is helpful because APIs are the nerves of any system, and they rarely change their behavior. If a change does occur, automated tests help us catch it.

API Testing Background

Earlier, we did API testing with a utility developed by the dev team. This utility worked only for Java APIs; it communicated with the wrapper and sent a response back to the user.

Then the need arose to test REST and SOAP APIs. We used Postman for both, but this was again manual: to cover multiple types of data, we had to pass each data set one by one. Now we use ReadyAPI for automated API testing. It can run automated tests with different types of data and check the results against the given assertions.

The ReadyAPI tool supports REST and SOAP and provides automated test capabilities with less effort; we can also do security testing of APIs in this tool.

Challenges in API Testing

- The main challenges in API testing are Parameter Combination, Parameter Selection, and Call sequencing.

- No GUI is available to test the API, so entering input values is challenging.

- For testers, validating and verifying the response in various systems is difficult.

- Testers need to know how to select and categorize parameters.

- The exception-handling function needs to be tested.

- Testers must be familiar with basic programming concepts.

Limitations or risks if we test the APIs manually

More Time Required for Testing:

If the tester tests every API manually with all possible combinations and scenarios, effective testing takes much longer. When time and resources are tight, testers cannot devote sufficient time to the more critical, higher-priority tasks that need extensive critical thinking.

Executing multiple test cases in each build is a very tedious task:

We need to execute multiple test cases with different test data in each build. Once an API automation suite is ready, this is easy to do with a data-driven approach.

Data Field validation and boundary value analysis:

Checking the data range and boundary values of each parameter used in an API is tedious when done manually. Once the test data is prepared with multiple combinations, it is easy to automate the API tests and check all the possible scenarios.
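
As a rough sketch of how boundary value analysis can be automated, the Python below generates the values just outside, on, and just inside each end of an inclusive integer range. The `submit_quantity` function and its 1 to 100 range are hypothetical stand-ins for an API under test.

```python
def boundary_cases(minimum, maximum):
    """Yield (value, should_be_accepted) pairs around an inclusive integer range."""
    values = [minimum - 1, minimum, minimum + 1,
              maximum - 1, maximum, maximum + 1]
    # dict.fromkeys removes duplicates (for very narrow ranges) and keeps order.
    for value in dict.fromkeys(values):
        yield value, minimum <= value <= maximum


def submit_quantity(quantity):
    """Hypothetical API under test: accepts quantities from 1 to 100."""
    if 1 <= quantity <= 100:
        return {"status": 200}
    return {"status": 400}


def check_quantity_boundaries():
    """Return the boundary values for which the API behaved unexpectedly."""
    failures = []
    for value, should_accept in boundary_cases(1, 100):
        accepted = submit_quantity(value)["status"] == 200
        if accepted != should_accept:
            failures.append(value)
    return failures


if __name__ == "__main__":
    print(list(boundary_cases(1, 100)))
    print("unexpected results for:", check_quantity_boundaries())
```

An empty list from `check_quantity_boundaries` means the API accepted and rejected exactly the values the range predicts.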

Analysis of the behavior of APIs in Different environments:

Ensuring that the APIs behave the same way and meet expectations across different environment combinations (database or app server) is itself a tiresome task.

Reusability of the same test suite is hard to achieve with manual API testing:

Running test cases manually requires time and people until every API has been tested, which creates a dependency on manual resources. As a result, manual testing cannot realistically deliver a characteristic that every test suite should have: reusability.

Less accuracy:

When testers test the APIs manually, there is a greater chance of missing test cases or scenarios.

How can we overcome these limitations & risks via Automated API Testing

Through its data-driven approach, API automation testing helps us test APIs with varied test data, especially when an API has multiple parameters and its functionality is complex.

Automation of APIs simplifies functional, integration, and regression testing every time new changes are made. API Automation provides Reusability & faster execution to your overall API testing process with minimal Manual resource effort.

Common library for repeating tests

We can create a standard library for tests that run repeatedly and reuse it in other projects.

Create frameworks for multiple projects

A framework for automated tests is beneficial: in it we can define the structure, test cases, and shared libraries, and we can reuse it for multiple projects.

Save test results

We can save our test results in tool history to compare results with previous ones and generate reports at any time.

Run specific tests as per our need

Using an automation tool, we can run selective test cases as per our need, saving time.

Detailed reports are available for management

We can generate a detailed report for a test run, save it for future reference, and present the data to management.

Automated testing using Continuous integration

Using continuous integration, we can run the automated tests once a new build is deployed on the server, or on any other event that we configure in Jenkins.

Key Metrics used for Measuring API test Automation Success

API automation plays an important role in maintaining software quality in a fast-paced Agile development environment.

However, API automation is also a huge investment. That investment could be wasted if there is no way to gauge the Automation’s effectiveness.

API Test automation metrics and KPIs offer a useful approach to assess the return on any investment, identify the test automation components that are and are not working, and make improvements to those components.

Here are good metrics to consider, depending on the context of the project and the team. These metrics reduce the ambiguity in measuring automation success and thereby provide more information that helps make decisions.

Time saved in Testing

One of the main reasons for building automated tests is to save valuable manual testing effort. While the automated tests repeat mundane testing tasks, testers can focus on the more critical and higher priority tasks, spend time exploring the application, and test modules that are hard to automate and need extensive critical thinking.

The amount of testing time saved is a good metric to assess the value provided to the team.

For example, in a 2-week sprint, if the automated tests reduce the manual testing effort from 2 days to 4-5 hours, that is a major success for the team and the organization and results in money saved. Such measurements should be tracked and communicated.
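
The arithmetic behind this example can be sketched as follows. Two assumptions are made here that the example itself does not state: a working day is taken as 8 hours, and "4-5 hours" is taken at its midpoint of 4.5 hours.

```python
def time_saved(manual_hours, automated_hours):
    """Return (hours saved, percentage saved) relative to the manual effort."""
    if manual_hours <= 0:
        raise ValueError("manual_hours must be positive")
    saved = manual_hours - automated_hours
    return saved, 100.0 * saved / manual_hours


HOURS_PER_DAY = 8                   # assumption: one working day is 8 hours
manual = 2 * HOURS_PER_DAY          # "2 days" of manual testing per sprint
automated = 4.5                     # assumption: midpoint of "4-5 hours"

saved, percent = time_saved(manual, automated)
print(f"{saved:.1f} hours saved per sprint ({percent:.0f}%)")
# prints: 11.5 hours saved per sprint (72%)
```

Tracking this figure sprint by sprint gives a simple trend line that can be shared with the team.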

Number of Risks mitigated

Testing of APIs needs to be prioritized based on risks. These could be unexpected events that would impact the business, defect-prone areas of the application, or any past or future events that could affect the project or the behavior of the APIs.

Ranking the risks from high to low priority is an effective method for evaluating the success of Automation regarding Risk. Automate API test cases by the severity of the risks, and keep note of how many risks the automated tests have successfully reduced.

Equivalent Manual Test Effort (EMTE)

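Equivalent Manual Test Effort expresses the work done by automated tests as the effort it would have taken to run the same tests manually. It essentially helps us answer questions such as, “Are we better off after automating the API project than we were when testing it manually?”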

Automated tests’ inadequacy

Suppose the team spends around four months building a robust API automation framework and then spends more time maintaining the automated tests than actually using them to find defects. In that case, the entire effort has not been very fruitful.

Surprisingly, this is a common problem in teams: the automated tests are unstable and fail due to multiple factors. As a result, the team stops trusting the automated API tests and eventually goes back to testing the APIs manually.

To handle such a situation, it is recommended to start by automating a few tests, run them constantly in each build, identify the flaky tests, and separate them from the stable ones. This approach helps raise the value of automated API tests.
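
One simple way to separate flaky tests from stable ones is sketched below. It assumes that each test has been run several times against the same build and treats a test as flaky when those runs disagree. The test names and outcomes are made up for illustration.

```python
def split_flaky(history):
    """Split tests into (stable, flaky) lists.

    history maps a test name to a list of booleans, one per repeated run
    against the same build (True = passed). A test whose runs disagree is
    treated as flaky; a test that always passes or always fails is stable.
    """
    stable, flaky = [], []
    for name, outcomes in sorted(history.items()):
        if len(set(outcomes)) > 1:
            flaky.append(name)
        else:
            stable.append(name)
    return stable, flaky


# Illustrative data only: three runs of each test against one build.
history = {
    "test_login": [True, True, True],
    "test_search": [True, False, True],
    "test_checkout": [False, False, False],
}

stable, flaky = split_flaky(history)
print("stable:", stable)
print("flaky:", flaky)
```

Note that a consistently failing test counts as stable here: it points at a real defect or a broken test rather than at flakiness, so it deserves a different kind of follow-up.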

Percentage automated test coverage of total coverage

This metric reports on the percentage of test coverage achieved by automated testing as compared to manual testing. You calculate it by dividing automated coverage by total coverage.

This is a significant measure of the maturity of API test automation because it tracks how many of the APIs delivered to customers are covered by automation. Management can also use it to assess the progress of a test automation initiative.
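
The calculation is a single division. The sketch below uses made-up numbers and assumes coverage is counted in covered APIs; the same formula works for any other unit of coverage.

```python
def automated_coverage_percent(automated_covered, total_covered):
    """Percentage of total test coverage that is achieved by automated tests."""
    if total_covered <= 0:
        raise ValueError("total_covered must be positive")
    if not 0 <= automated_covered <= total_covered:
        raise ValueError("automated_covered must be between 0 and total_covered")
    return 100.0 * automated_covered / total_covered


# Illustrative numbers only: 45 of 60 covered APIs are covered by automation.
print(automated_coverage_percent(45, 60))  # prints 75.0
```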

Percentage of API tests passed or failed

This metric counts the number of tests that have recently passed or failed as a percentage of total tests planned to run via Automation.

Counting the number of tests passed or failed gives an overview of testing progress. We can create a bar graph or a pie chart that shows passed tests, failed tests, and tests that haven’t been run yet. With these, we can compare figures across different releases and days, which helps analyze the progress and stability of automated testing.
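
A minimal sketch of this metric follows, with illustrative numbers. Tests that were planned but have no recorded result are counted as not run.

```python
from collections import Counter


def result_percentages(results, planned_total):
    """Return passed / failed / not-run shares as percentages of planned tests.

    results is a list of "passed" or "failed" strings, one per executed test.
    """
    if planned_total <= 0:
        raise ValueError("planned_total must be positive")
    counts = Counter(results)
    passed, failed = counts["passed"], counts["failed"]
    not_run = planned_total - passed - failed
    if not_run < 0:
        raise ValueError("more results than planned tests")
    return {
        "passed": round(100.0 * passed / planned_total, 1),
        "failed": round(100.0 * failed / planned_total, 1),
        "not_run": round(100.0 * not_run / planned_total, 1),
    }


# Illustrative run: 40 tests planned, 30 passed, 6 failed, 4 not yet run.
summary = result_percentages(["passed"] * 30 + ["failed"] * 6, planned_total=40)
print(summary)
```

The three values are exactly what a pie chart of passed, failed, and not-run tests would display for one release or day.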

Ease of using automation tools or framework

Teams often forget that their automated tests need to be low-maintenance, easy to run by anyone, have simple and understandable code, and give clear information about the tests that run in terms of passed and failed tests, logs, visual dashboards, screenshots, and more.

These metrics help change the mindset of teams to shift focus to the value of automated tests instead of arbitrary, simple-minded numbers and effort expended.

Number of Bugs found

Using the number of bugs found by automated tests as a measure of API automation success deserves careful interpretation.

For example, if the automation tests found 20 defects, it could mean the developers did not do their job correctly; and if there are zero defects found, it could mean the automation scripts weren’t effective. There are multiple ways to interpret these results.

Without careful analysis, they can easily steer the team’s mindset from the actual value of automated tests to misleading goals and benchmarks.

Continuous Improvement

Selecting the right metrics can help you evaluate the success of your automation efforts and provide direction for subsequent automation projects.

Pick a few precise metrics, start a small but effective automation project, observe how it goes, and keep getting better every day. After just a few cycles, you’ll find your software quality is so much better.

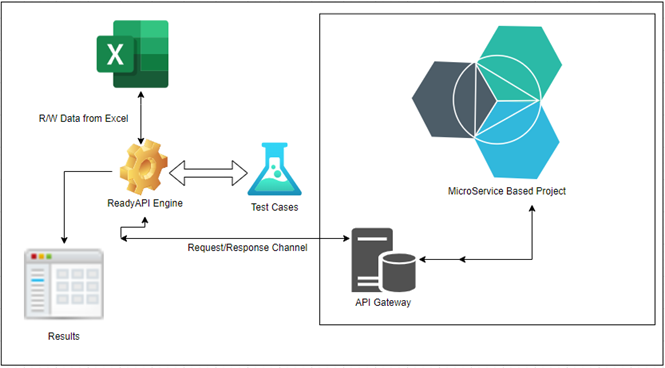

Automated API Testing summary in Microservice-based project

In our microservice-based project, we started with automated API testing using the ReadyAPI tool; we needed to test all the APIs in a standalone and integrated manner, so we used features of the ReadyAPI tool to achieve our testing goal.

Following is the list of ReadyAPI features that we used most to automate API testing:

Data Source & Data source loop

We used the Data Source and Data Source Loop features of ReadyAPI to incorporate a data-driven approach in our automated API testing.

Data Source reads data from a source file, which for us is an Excel sheet. Data Source Loop executes the test cases in a loop, with different test data in each iteration.
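
The idea behind a data source and its loop can be sketched outside ReadyAPI in plain Python. This is not ReadyAPI's own mechanism: the sketch swaps the Excel sheet for inline CSV text so that it needs only the standard library, and `add_api` is a hypothetical API under test.

```python
import csv
import io

# Inline CSV standing in for the Excel sheet (an assumption for this sketch).
TEST_DATA = """a,b,expected
1,2,3
10,-4,6
0,0,0
"""


def add_api(a, b):
    """Hypothetical API under test: returns the sum of two numbers."""
    return {"status": 200, "result": a + b}


def run_data_driven(csv_text):
    """Run the same test once per data row and collect pass/fail outcomes."""
    outcomes = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        response = add_api(int(row["a"]), int(row["b"]))
        outcomes.append(response["result"] == int(row["expected"]))
    return outcomes


if __name__ == "__main__":
    print(run_data_driven(TEST_DATA))
```

Adding a new scenario then means adding a row to the data, not writing a new test.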

Assertion

We applied various types of assertions to decide whether each executed test case passed or failed, by comparing the actual response with the expected behavior of the API.

Reporting

We used the Reporting feature to generate sufficiently detailed reports of executed test cases, which help compare the results of the same test cases across successive builds or releases.

Data Sink

We used the Data Sink feature to write run-time values of some parameters, taken from the response of a successfully executed API, to an Excel sheet. These values are then used as input for other APIs to achieve integrated API testing.

Configure Environments

We used the Configure Environments feature of the ReadyAPI tool to define different environments, such as QA and Dev, with their endpoints and specifications in a single place, and referenced them throughout the test project. The same test project can therefore run on any environment just by switching the environment. If an environment’s specifications change, only that single place needs updating, and the change is reflected across the complete test project without touching the API structure or the functional test cases.
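
The same principle, one place that holds every environment's settings, can be sketched in Python. The environment names follow the text, but the URLs, the timeout values, and the `build_url` helper are hypothetical and unrelated to how ReadyAPI stores its configuration.

```python
# Single place that defines every environment (all values are hypothetical).
ENVIRONMENTS = {
    "dev": {"base_url": "https://dev.example.test", "timeout_s": 10},
    "qa": {"base_url": "https://qa.example.test/", "timeout_s": 30},
}


def build_url(environment, path):
    """Build a request URL for the chosen environment."""
    config = ENVIRONMENTS[environment]
    return config["base_url"].rstrip("/") + "/" + path.lstrip("/")


# The test itself names only the path; the environment is switched in one place.
for env in ("dev", "qa"):
    print(env, build_url(env, "/orders/42"))
```

Because tests refer only to the environment name, changing an endpoint means editing `ENVIRONMENTS` and nothing else.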

Preferences

We used the Preferences feature to execute an automated test project according to our required preferences & settings.

Property, Property Transfer

We used the Property and Property Transfer features to fetch the value of a parameter or property from the response of one API, store it in a variable, and transfer it for use in another API.
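
A property transfer boils down to three steps: extract a value from a response, store it under a name, and substitute it into the next request. The sketch below shows those steps in plain Python. The response body, the dotted-path helper `extract`, and the request template are all made up for illustration and do not reflect ReadyAPI's syntax.

```python
import json
from string import Template


def extract(response_text, path):
    """Follow a dotted path such as 'data.token' through a JSON document."""
    value = json.loads(response_text)
    for key in path.split("."):
        value = value[key]
    return value


# Hypothetical response from a first API call.
login_response = '{"data": {"token": "abc123", "userId": 7}}'

# Store the extracted values as named properties.
properties = {
    "token": extract(login_response, "data.token"),
    "user_id": extract(login_response, "data.userId"),
}

# Transfer the stored properties into the next request.
next_request = Template("GET /users/$user_id?token=$token").substitute(properties)
print(next_request)  # prints: GET /users/7?token=abc123
```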

Delay

We used the Delay feature to wait a specified number of milliseconds between successive test cases, to mimic real-time behavior and manage resource consumption.

Conditional GoTo

We used the Conditional GoTo feature of the ReadyAPI tool to execute or skip dependent test cases based on a defined condition.
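
The effect of skipping dependent test cases can be sketched as follows. This is a simplified illustration of the idea, not ReadyAPI's Conditional GoTo itself: each step names the step it depends on, and it is skipped unless that step passed. The step names and actions are hypothetical.

```python
def run_steps(steps):
    """Run (name, action, depends_on) steps in order.

    A step is skipped when the step it depends on did not pass;
    depends_on is None for steps that have no dependency.
    """
    status = {}
    for name, action, depends_on in steps:
        if depends_on is not None and status.get(depends_on) != "passed":
            status[name] = "skipped"
            continue
        status[name] = "passed" if action() else "failed"
    return status


# Hypothetical steps: the profile check only makes sense after a good login.
steps = [
    ("login", lambda: False, None),
    ("get_profile", lambda: True, "login"),
    ("health_check", lambda: True, None),
]

print(run_steps(steps))
```

Skipping dependent steps keeps the report focused on the root failure instead of a cascade of follow-on failures.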

TestRunner

We explored and used TestRunner to run the automated test project created in the ReadyAPI tool from the command line, and to integrate the project with our CI/CD pipeline through TestRunner’s integration with Jenkins.

The following picture gives an idea about our API automation testing approach, which we have described above:

We have also used the ReadyAPI tool to run security tests on our automated APIs. This helps us find security loopholes, categorized as Error, Alert, and Warning, and it can generate reports of the security run with all the necessary details.

Conclusion

As you all know, manual testing takes time, and it takes the same effort every time we repeat the same tests. By automating test cases, we have reduced our effort for future test runs.

Automation tools provide the flexibility to run tests multiple times. It was a one-time effort for us, and now we can run the automation suite at any time without much effort.

Initially, we faced many challenges in automating the APIs, but once our framework for API testing was ready, the process sped up, and we automated around 150 APIs using the ReadyAPI tool.

In the next release of our microservice-based product, we are changing some of the APIs and running regression tests on the other APIs to check the impact of the changes, and this regression run is completely automated.

We planned to save around one month in this release; in subsequent releases, testing will take much less time than it did the first time. This benefits both the company and the team by helping complete tasks on time.
